Translating EPUB Ebooks for Free with a Local LLM: An Effective Approach

Translating an entire ebook takes more than converting text: you also have to handle formatting, code, and images, and cope with the sheer length of the book. This guide explores how to do this for free using local Large Language Models (LLMs).

Challenges in Ebook Translation

Directly feeding an entire EPUB to an LLM is impractical. Key challenges include:

- Book Length: LLMs have context window limits, requiring text to be split into manageable chunks.

- Markup and Formatting: EPUBs contain HTML/XML markup that needs careful handling to preserve structure and readability.

- Code and Formulas: Code blocks and formulas generally need to pass through unchanged while the surrounding prose is translated; otherwise technical content loses accuracy.

- Images and Links: These elements need to be managed or replaced appropriately.

- Resource Constraints: Free tiers of cloud platforms like Colab often have timeouts or usage limits, making large-scale translation difficult.
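To make the book-length point concrete, here is a minimal sketch of paragraph-based chunking. It is not how TranslateBookWithLLM splits text; the function name `chunk_paragraphs` and the `max_chars` budget are assumptions chosen for illustration, and a real tool would more likely count tokens than characters.

```python
def chunk_paragraphs(text: str, max_chars: int = 2000) -> list[str]:
    """Group paragraphs into chunks of at most max_chars characters.

    A single paragraph longer than max_chars is kept whole as its own chunk.
    """
    chunks: list[str] = []
    current: list[str] = []
    current_len = 0
    for para in text.split("\n\n"):
        para = para.strip()
        if not para:
            continue
        # +2 accounts for the blank-line separator added when joining.
        added = len(para) + (2 if current else 0)
        if current and current_len + added > max_chars:
            # The budget would be exceeded: close the current chunk.
            chunks.append("\n\n".join(current))
            current, current_len = [], 0
            added = len(para)
        current.append(para)
        current_len += added
    if current:
        chunks.append("\n\n".join(current))
    return chunks
```

Splitting on paragraph boundaries rather than at a fixed character offset keeps each sentence intact, which gives the model enough context to translate it sensibly.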

Recommended Tool: TranslateBookWithLLM

For a streamlined workflow, I recommend the TranslateBookWithLLM tool. It simplifies the process by handling EPUB unpacking, text chunking, and interaction with LLM APIs. Its command-line interface is straightforward, and it even offers an optional web UI for a more interactive experience.

[Link to TranslateBookWithLLM: https://github.com/hydropix/TranslateBookWithLLM]
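For a sense of what "EPUB unpacking" involves: an EPUB file is a ZIP archive whose chapters are stored as XHTML documents. The sketch below lists those documents with Python's standard `zipfile` module. It is an illustration only, not the tool's own code, and the helper name `list_content_documents` is hypothetical.

```python
import zipfile


def list_content_documents(epub_file) -> list[str]:
    """Return the names of the XHTML/HTML files stored in an EPUB archive.

    epub_file may be a path or a binary file-like object.
    """
    with zipfile.ZipFile(epub_file) as archive:
        return sorted(
            name
            for name in archive.namelist()
            if name.lower().endswith((".xhtml", ".html", ".htm"))
        )
```

Note that alphabetical order is not necessarily reading order; the actual chapter sequence is defined by the package document (the `.opf` file) inside the archive.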

Choosing Your LLM

When aiming for free translation, local LLMs are your best bet to avoid API rate limits and costs. Here are some options:

- Free Colab Tier: A viable starting point, but be mindful of session timeouts and usage caps.

- Local Setup (Ollama or llama.cpp): For more robust and consistent translation, setting up a local LLM is ideal.

  - Ollama: https://ollama.com/ - Simplifies running LLMs locally.

  - llama.cpp: https://github.com/ggerganov/llama.cpp - A C/C++ implementation for running LLMs efficiently on various hardware.
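As a rough idea of what talking to a local model looks like, the sketch below sends one piece of text to Ollama's `/api/generate` endpoint using only the standard library. It assumes Ollama is running on its default port 11434 and that the model has already been pulled; the prompt wording and the function names `build_request` and `translate` are my own assumptions, not part of any tool.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default port


def build_request(text, source, target, model="mistral-nemo", url=OLLAMA_URL):
    """Build the HTTP request for a single, non-streaming translation call."""
    # The prompt wording is an assumption; tune it for your model.
    prompt = (
        f"Translate the following text from {source} to {target}. "
        f"Return only the translation.\n\n{text}"
    )
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def translate(text, source, target, timeout=300):
    """Send the request to a running Ollama server and return the generated text."""
    request = build_request(text, source, target)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read())["response"]
```

With a server running, `translate("Good morning", "English", "Italian")` would return the model's answer as a string. Setting `"stream": False` asks for a single JSON object instead of a stream of partial responses, which keeps the parsing trivial.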

Model Selection

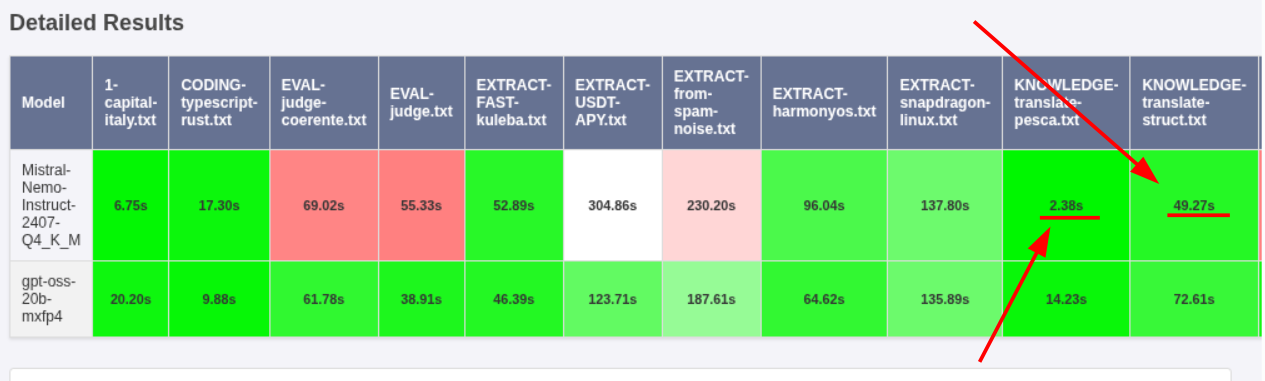

- gpt-oss 20B: While capable, it can be prone to timeouts on Colab with lengthy books.

- mistral-nemo: An older but excellent choice for structured translations. It's fast and handles complex formatting well, making it a reliable option for book translation tasks.

mistral-nemo is less capable than gpt-oss overall, but in both the translation test and the structured translation test it produced correct results and was at least 2x faster than gpt-oss. The comparison was made with llm-eval-simple.

Example Command

If you have TranslateBookWithLLM installed as a Python package and configured with a local LLM via Ollama, a command might look like this:

python translate.py --provider ollama --api_endpoint http://localhost:11434/api/generate -sl English -tl Italian -i ../example.epub -o ../example-it.epub -m mistral-nemo
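If you have several books to translate, the same command can be driven from a small script. The sketch below reuses only the flags shown in the command above; the `-it` style output suffix, the folder layout, and the function names are assumptions made for illustration, and the script expects to be run from the directory that contains `translate.py`.

```python
import subprocess
from pathlib import Path

API_ENDPOINT = "http://localhost:11434/api/generate"


def build_command(src: Path, target_lang: str, model: str = "mistral-nemo") -> list[str]:
    """Build the translate.py argument list for one EPUB file."""
    # Hypothetical naming scheme: example.epub -> example-it.epub for Italian.
    suffix = target_lang[:2].lower()
    out = src.with_name(f"{src.stem}-{suffix}{src.suffix}")
    return [
        "python", "translate.py",
        "--provider", "ollama",
        "--api_endpoint", API_ENDPOINT,
        "-sl", "English",
        "-tl", target_lang,
        "-i", str(src),
        "-o", str(out),
        "-m", model,
    ]


def translate_folder(folder: Path, target_lang: str) -> None:
    """Translate every .epub in folder, one book at a time."""
    for src in sorted(folder.glob("*.epub")):
        # check=True stops the batch if one translation fails.
        subprocess.run(build_command(src, target_lang), check=True)
```

Running the books sequentially rather than in parallel is deliberate: a single local model on one GPU gains little from concurrent requests.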

Conclusion

Translating books for free using LLMs is a rewarding challenge. By combining the right tools like TranslateBookWithLLM with efficient local models such as mistral-nemo, you can achieve great results without incurring costs. For even higher quality or faster processing, consider investing in more Colab credits or a premium API provider.

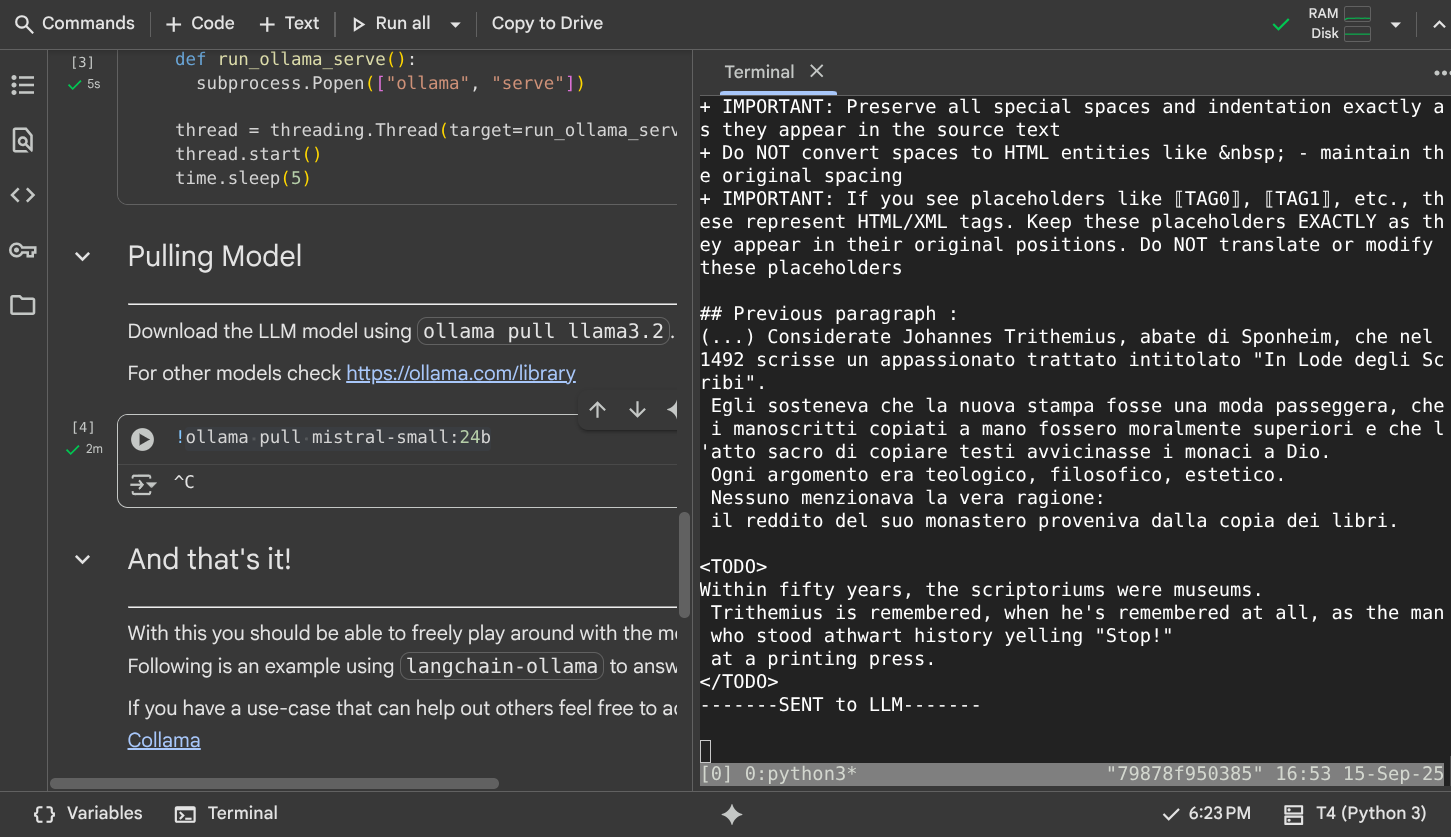

If you want to start your adventure, here is a Colab notebook with Ollama and a T4 GPU that you can use for free for 4 hours.