Finally, Stable Diffusion SDXL with ROCm acceleration and benchmarks

PROMPT: Joker fails to install ROCm to run Stable Diffusion SDXL, cinematic

AI is the future and I'd rather not depend on NVIDIA's monopoly. I also don't need a GPU for gaming, so is AMD the alternative? Maybe. I wrote about it previously (in Italian).

In this post I just want to document that ROCm support from AMD, together with the surrounding ecosystem (Python, PyTorch, ...), is a mess, but it finally works! At least on my CPU / APU: an AMD Ryzen 7 7700 (gfx1036) with 48 GB of RAM @ 5200 MHz.

So will it also work for you? I don't know; there are too many moving parts.

Is your CPU / GPU / APU supported?

The official AMD docs are improving, but only a few Linux distros are supported. The most useful website for checking compatibility is Debian's Salsa (even though Debian isn't an officially supported distro).

$ rocm_agent_enumerator

gfx000

gfx1036 # <-- THIS IS WHAT YOU NEED TO KNOW
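If you want to script this check, here is a minimal Python sketch that parses the output above. The function names are mine (hypothetical), and I'm assuming `gfx000` is the placeholder entry for the CPU agent, so it gets skipped.

```python
import subprocess


def parse_gfx_targets(enumerator_output: str) -> list[str]:
    """Return the GPU targets found in rocm_agent_enumerator output.

    Assumption: gfx000 is the placeholder for the CPU agent, so it is skipped.
    """
    targets = []
    for line in enumerator_output.splitlines():
        # Drop anything after a '#' so annotated output still parses.
        name = line.split("#", 1)[0].strip()
        if name.startswith("gfx") and name != "gfx000":
            targets.append(name)
    return targets


def detect_gfx_targets() -> list[str]:
    """Run rocm_agent_enumerator; return [] if the tool is missing or fails."""
    try:
        result = subprocess.run(
            ["rocm_agent_enumerator"],
            capture_output=True,
            text=True,
            check=True,
        )
    except (OSError, subprocess.CalledProcessError):
        return []
    return parse_gfx_targets(result.stdout)


if __name__ == "__main__":
    print(detect_gfx_targets())
```

On my machine the interesting entry is `gfx1036`; an empty list most likely means the ROCm tools are not installed or no GPU agent is visible.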

Tell me the "secret sauce" to make Stable Diffusion Web UI work with ROCm

Use Docker and search the web for every error you hit. Expect segfaults, library incompatibilities, pinned dependencies...

Here is my special combo.

# Dockerfile.rocm
FROM rocm/dev-ubuntu-22.04

ENV DEBIAN_FRONTEND=noninteractive \
    PYTHONUNBUFFERED=1 \
    PYTHONIOENCODING=UTF-8

WORKDIR /sdtemp

RUN apt-get update && \
    apt-get install -y \
        wget \
        git \
        python3 \
        python3-pip \
        python-is-python3

RUN python -m pip install --upgrade pip wheel

RUN git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui /sdtemp

ENV TORCH_COMMAND="pip install --pre torch torchvision torchaudio --index-url https://download.pytorch.org/whl/nightly/rocm5.6"

RUN python -m $TORCH_COMMAND

EXPOSE 7860

RUN python launch.py --skip-torch-cuda-test --exit

RUN python -m pip install opencv-python-headless

WORKDIR /stablediff-web

# docker-compose.yml
version: '3'

services:
  stablediff-rocm:
    build:
      context: .
      dockerfile: Dockerfile.rocm
    container_name: stablediff-rocm-runner
    environment:
      TZ: "Europe/Rome"
      ROC_ENABLE_PRE_VEGA: 1
      COMMANDLINE_ARGS: "--listen --lowvram --no-half --skip-torch-cuda-test"
      ## IMPORTANT!
      HSA_OVERRIDE_GFX_VERSION: "10.3.0"
      ROCR_VISIBLE_DEVICES: 1
      #PYTORCH_HIP_ALLOC_CONF: "garbage_collection_threshold:0.6,max_split_size_mb:128"
    entrypoint: ["/bin/sh", "-c"]
    command: >
      "rocm-smi; . /stablediff.env; echo launch.py $$COMMANDLINE_ARGS;
      if [ ! -d /stablediff-web/.git ]; then
        cp -a /sdtemp/. /stablediff-web/;
      fi;
      python launch.py"
    ports:
      - "7860:7860"
    devices:
      - "/dev/kfd:/dev/kfd"
      - "/dev/dri:/dev/dri"
    group_add:
      - video
    ipc: host
    cap_add:
      - SYS_PTRACE
    security_opt:
      - seccomp:unconfined
    volumes:
      - ./cache:/root/.cache
      - ./stablediff.env:/stablediff.env
      - ./stablediff-web:/stablediff-web
      - ./stablediff-models:/stablediff-web/models/Stable-diffusion

$ docker-compose build stablediff-rocm

$ docker-compose up stablediff-rocm

The current stable ROCm 5.4 does not work here: you have to use the ROCm 5.6 pre-release builds, or PyTorch 1 instead of PyTorch 2. Crazy.
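Since the ROCm version matters so much, it helps to confirm which one your PyTorch wheel was built for. A small sketch, assuming the usual `+rocmX.Y` suffix in the version string (the helper name is hypothetical; in a real session you would pass `torch.__version__`):

```python
import re
from typing import Optional


def rocm_version_of(torch_version: str) -> Optional[str]:
    """Extract the ROCm version from a PyTorch version string, if present.

    Assumption: ROCm wheels carry a local version suffix such as '+rocm5.6'.
    """
    match = re.search(r"\+rocm(\d+(?:\.\d+)*)", torch_version)
    return match.group(1) if match else None


if __name__ == "__main__":
    # Version string of the nightly build used for the benchmarks in this post.
    print(rocm_version_of("2.1.0.dev20230827+rocm5.6"))
```

A result of `None` would suggest a CPU-only or CUDA wheel got installed instead of the ROCm one.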

This setup was inspired by this discussion and a lot of debugging. The environment variables are very important: set HSA_OVERRIDE_GFX_VERSION and ROCR_VISIBLE_DEVICES for your situation. The --lowvram flag is optional: it makes generation a little slower, but it saves some RAM.
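To catch a missing variable before a long and confusing startup, something like the following could be run inside the container. It is only a sketch: the helper name is hypothetical, and the final PyTorch check relies on ROCm builds exposing the GPU through the `torch.cuda` API.

```python
import os

# The two variables this setup depends on; the right values differ per machine.
REQUIRED_VARS = ("HSA_OVERRIDE_GFX_VERSION", "ROCR_VISIBLE_DEVICES")


def missing_rocm_vars(env) -> list[str]:
    """Return the required variables that are unset or empty in `env`."""
    return [name for name in REQUIRED_VARS if not env.get(name)]


if __name__ == "__main__":
    missing = missing_rocm_vars(os.environ)
    if missing:
        print("Missing environment variables:", ", ".join(missing))
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed in this environment")
    else:
        # ROCm builds of PyTorch report the GPU through the torch.cuda API.
        print("GPU visible to PyTorch:", torch.cuda.is_available())
```

If the GPU is not visible even with both variables set, the values themselves are the next thing to check.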

Stable Diffusion CPU vs ROCm benchmarks

Is ROCm useful if you have only a little VRAM?

Here is the test:

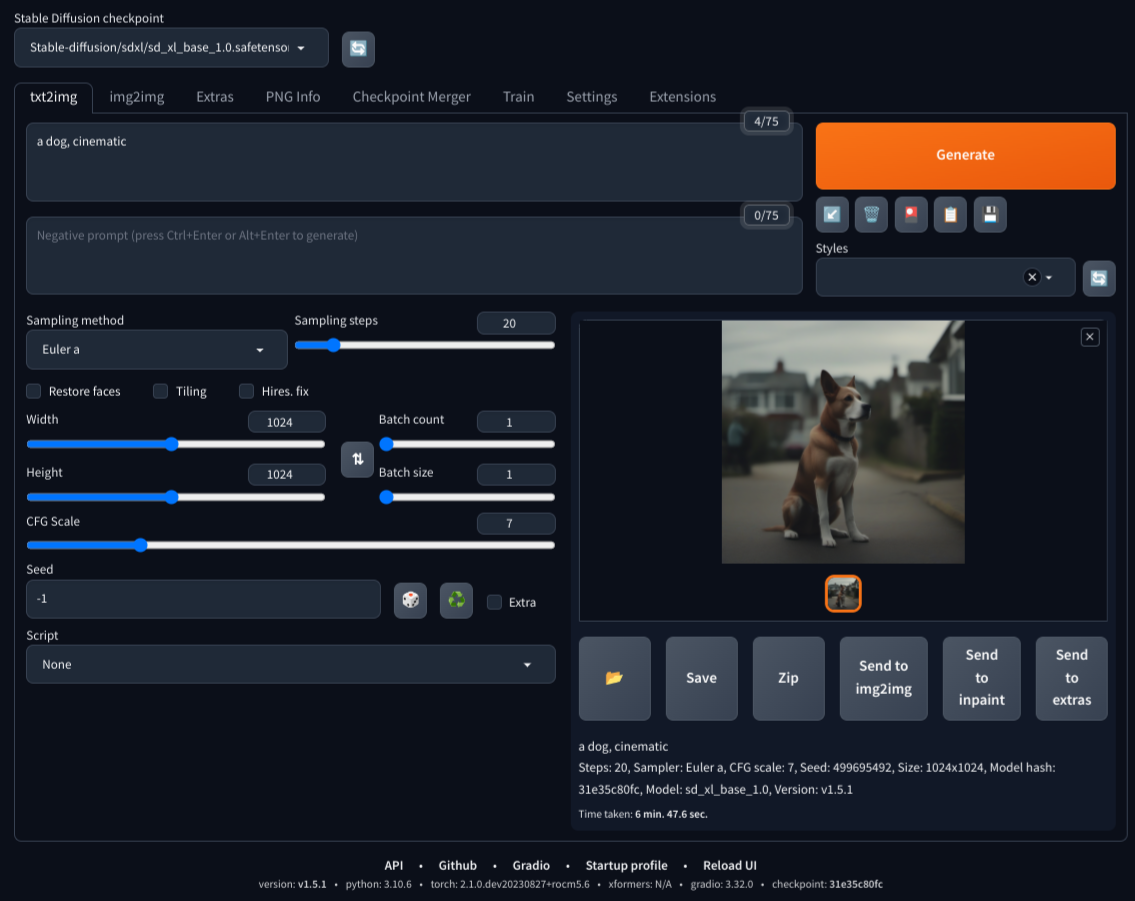

Debian 12 Linux 6.1 • SDWEBUI version: v1.5.1 • python: 3.10.6 • torch: 2.1.0.dev20230827+rocm5.6 • xformers: N/A • gradio: 3.32.0 • checkpoint: 31e35c80fc

Prompt: "a dog, cinematic"

Steps: 20 (default)

Sampling: Euler A (default)

Width: 1024 (SDXL at 512px is useless)

Height: 1024

# Results:

cpu sd1.5 1024 = 36.55 s/it, RAM 18 GB

cpu sdxl 1024 = 22.72 s/it, RAM 36 GB (+ swap?)

rocm sd1.5 1024 = 36.69 s/it, RAM 15 GB (same speed as CPU)

rocm sdxl 1024 = 19.33 s/it, RAM 35 GB (the best)

rocm sdxl 1024 lowvram = 20.27 s/it, RAM 33 GB

SDXL on ROCm is a little faster than in CPU mode (6:47 vs 7:30 in total).
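For a rough sanity check, the s/it figures can be turned into an estimated sampling time for 20 steps. This is only a back-of-the-envelope sketch: the estimates do not match the wall-clock times quoted above exactly, presumably because the s/it readings are snapshots rather than exact averages (that part is my assumption).

```python
STEPS = 20  # default number of steps used in the test

# Seconds per iteration, copied from the SDXL results above.
SECONDS_PER_IT = {
    "cpu sdxl": 22.72,
    "rocm sdxl": 19.33,
    "rocm sdxl lowvram": 20.27,
}


def sampling_seconds(sec_per_it: float, steps: int = STEPS) -> float:
    """Estimated time spent in the sampling loop only."""
    return sec_per_it * steps


def fmt(seconds: float) -> str:
    """Format seconds as m:ss, rounded to the nearest second."""
    minutes, secs = divmod(round(seconds), 60)
    return f"{minutes}:{secs:02d}"


# Ratio of CPU to ROCm time per iteration on SDXL.
speedup = SECONDS_PER_IT["cpu sdxl"] / SECONDS_PER_IT["rocm sdxl"]

if __name__ == "__main__":
    for name, sec_per_it in SECONDS_PER_IT.items():
        print(f"{name}: ~{fmt(sampling_seconds(sec_per_it))} for {STEPS} steps")
    print(f"ROCm vs CPU on SDXL: {speedup:.2f}x")
```

By this estimate ROCm is roughly 1.18x faster per iteration on SDXL, which is in line with the small gap in the measured totals.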

Conclusion

Currently ROCm is only slightly faster than the CPU on SDXL, but it saves some RAM, especially with the --lowvram flag.

CPU mode is more compatible with the libraries and easier to get working.

Stable Diffusion 1.5 is slower than SDXL at 1024 pixels, and in general it is better to use SDXL.

Stable Diffusion recommends a GPU with 16 GB of VRAM for SDXL, but it's nice to see that cheaper, more general-purpose hardware can run it too. A GPU is faster than a CPU/APU for AI, but you can still run AI if you have at least 48 GB of RAM; you just have to wait longer.